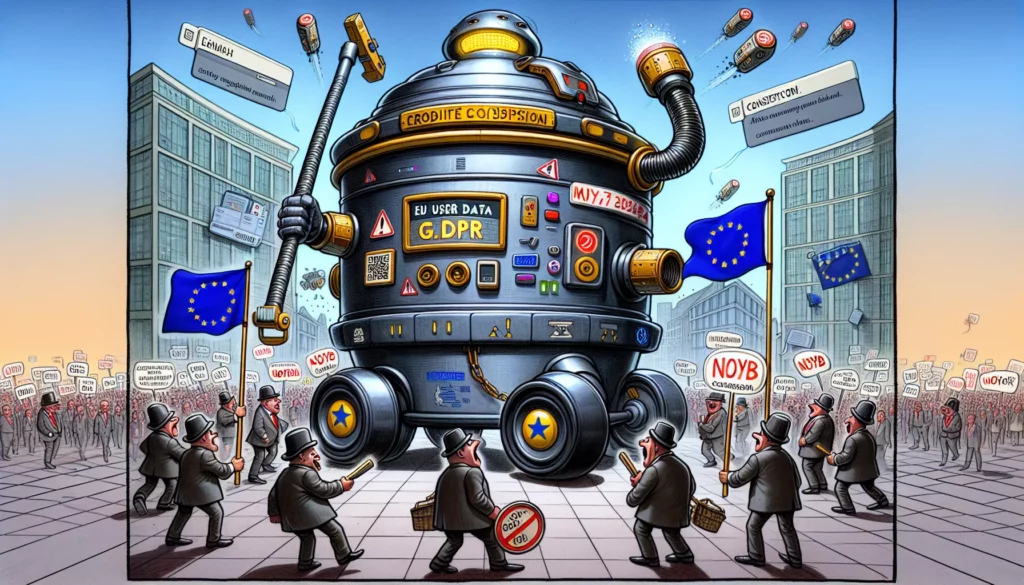

Meta Set to Use E.U. User Data for AI Training Starting May 27 Without User Consent; Noyb Signals Potential Legal Action

Austrian privacy advocacy group noyb (None of Your Business) has sent a cease-and-desist letter to Meta’s Irish headquarters, threatening a class action lawsuit if the company proceeds with its plan to use user data to train its artificial intelligence (AI) models without explicit opt-in consent.

This action follows Meta’s recent announcement that it will resume training its AI models on publicly available data from Facebook and Instagram users in the European Union (E.U.) beginning May 27, 2025. The effort was halted in June 2024 after Irish data protection authorities raised concerns.

Noyb asserts that Meta is invoking a purported “legitimate interest” to collect user data wholesale, bypassing the requirement for opt-in consent. The group warns that Meta’s approach carries considerable legal risk because it relies on an “opt-out” mechanism rather than the “opt-in” consent that noyb says the law requires for AI training.

Noyb also argued that Meta AI does not comply with the General Data Protection Regulation (GDPR). It noted that while Meta claims a “legitimate interest” in collecting data for AI development, the company also limits users’ opportunity to opt out before their data is used.

The advocacy group added that even if only 10% of Meta’s users agreed to share their data, the resulting volume would be sufficient for the company to learn regional languages.

Meta has previously claimed that collecting such data is necessary to accurately reflect the diverse linguistic, geographic, and cultural nuances of the E.U.

Max Schrems, the founder of noyb, criticized Meta’s strategy, asserting that the company’s reliance on an opt-out system rather than an opt-in system is a substantial legal violation. He argued that Meta’s rationale for collecting the data for AI training is unfounded, pointing out that other AI developers build superior models without resorting to social media data.

Noyb also criticized Meta for shifting the burden onto users by advancing its plans without securing their consent, and noted that national data protection authorities have largely remained silent on the legality of AI training without explicit user consent.

In a response shared with Reuters, Meta rejected noyb’s assertions, insisting that they are wrong on both the facts and the law. The company maintained that it gives E.U. users a clear option to object to the processing of their data for AI training.

This is not the first time Meta’s interpretation of GDPR’s “legitimate interest” has faced scrutiny. In August 2023, the company agreed to move from “legitimate interest” to a consent-based framework for processing user data for targeted advertising.

The situation is further complicated by a recent ruling from the Belgian Court of Appeal, which declared the Transparency and Consent Framework, used by Google, Microsoft, Amazon, and others to obtain consent for data processing, illegal in Europe because of multiple violations of GDPR principles.