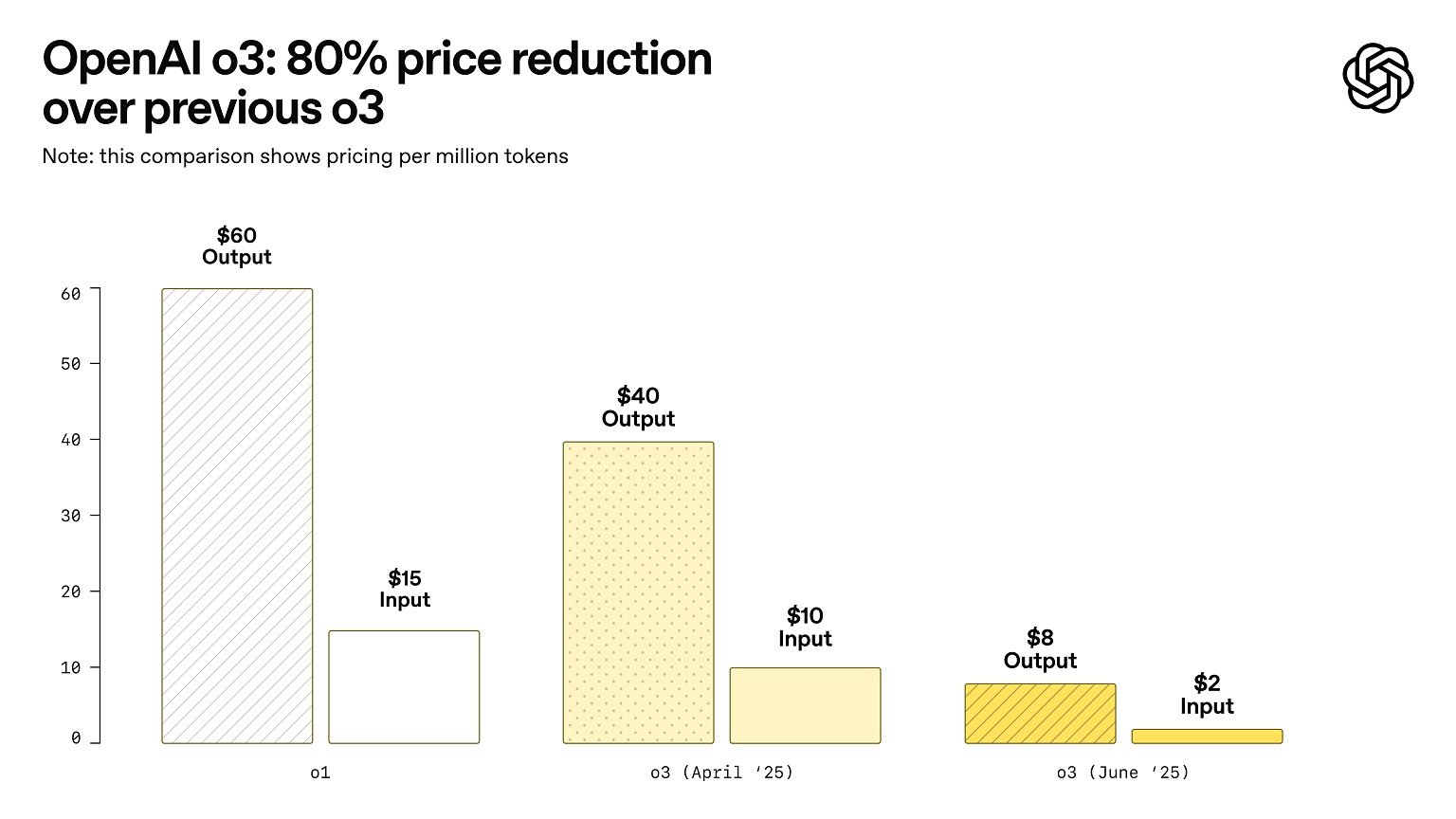

ChatGPT o3 API Price Cut by 80% With No Impact on Performance

OpenAI's 80% price cut for the o3 API gives developers much cheaper access to the model, and independent testing indicates that its performance has not changed.

On June 11, 2025, OpenAI announced this price cut for o3, its leading reasoning model. Input now costs $2 per million tokens and output $8 per million tokens, down from $10 and $40 respectively.
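To make the savings concrete, here is a minimal sketch of the per-request cost arithmetic. The new prices ($2 and $8 per million tokens) come from the announcement. The pre-cut prices are derived from the stated 80% reduction rather than hard-coded. The request size (10,000 input tokens, 2,000 output tokens) is a hypothetical example chosen only for illustration.

```python
# Minimal sketch: per-request cost of o3 before and after the price cut.
# Prices are USD per one million tokens. The new prices come from the
# announcement; the old prices are derived from the stated 80% reduction.

NEW_INPUT_PRICE = 2.0    # USD per 1M input tokens
NEW_OUTPUT_PRICE = 8.0   # USD per 1M output tokens
REDUCTION_PERCENT = 80   # stated price reduction


def pre_cut_price(new_price: float, reduction_percent: int) -> float:
    """Undo a percentage reduction: new = old * (100 - r) / 100."""
    return new_price * 100 / (100 - reduction_percent)


OLD_INPUT_PRICE = pre_cut_price(NEW_INPUT_PRICE, REDUCTION_PERCENT)    # 10.0
OLD_OUTPUT_PRICE = pre_cut_price(NEW_OUTPUT_PRICE, REDUCTION_PERCENT)  # 40.0


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost in USD of one request, given per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical request size, chosen only for illustration.
    input_tokens, output_tokens = 10_000, 2_000
    old = request_cost(input_tokens, output_tokens, OLD_INPUT_PRICE, OLD_OUTPUT_PRICE)
    new = request_cost(input_tokens, output_tokens, NEW_INPUT_PRICE, NEW_OUTPUT_PRICE)
    print(f"before: ${old:.4f}  after: ${new:.4f}")  # before: $0.1800  after: $0.0360
```

Because input and output prices were both cut by the same percentage, every request costs one fifth of what it did before, whatever its mix of input and output tokens. Actual bills can differ from this estimate. For example, o3's hidden reasoning tokens are billed as output tokens, so output counts are often larger than the visible reply.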

The cut did not involve changing the model itself. In a post on X, OpenAI said that the “inference stack that serves o3 has been optimized,” so the same model is now offered at a much lower price.

Most ChatGPT users never access the models through the API. Even so, the price cut makes o3 cheaper to use in API-dependent tools such as Cursor and Windsurf.

The independent benchmarking organization ARC Prize has also checked whether performance changed after the price cut. In a follow-up post, it reported that retesting o3 produced no difference from the original results. This supports OpenAI's statement that the savings came from optimizing the serving infrastructure rather than from swapping in a different model.

Alongside the price cut, OpenAI has added o3-pro to the API. This version of o3 uses more compute per request to produce better results.